Parking Slot Detection

Detecting parking slot occupancy saves drivers time by pointing them to the exact location of an empty space. Image-based detection can be used to locate the spaces that are free.

Abstract: Parking slot detection algorithms that use visual sensors are required by various automated parking assistance systems. Most previous studies have employed popular feature detectors, such as the Harris corner detector or the Hough line detector, to detect parking slots.

A display unit installed at the entrance of the parking lot shows an LED for every parking slot in every parking lane; a glowing LED indicates an empty slot. IR sensors placed inside each parking slot detect the presence of a vehicle.

Parking Slot Detection Device

- Intranode occupancy detection: once the algorithm has computed occupancy values for each parking slot (corresponding to the RoIs), an intranode occupancy detection process takes place. To filter out transitory events (e.g., people walking by and shadows cast by external objects), the occupancy status becomes effective and is …

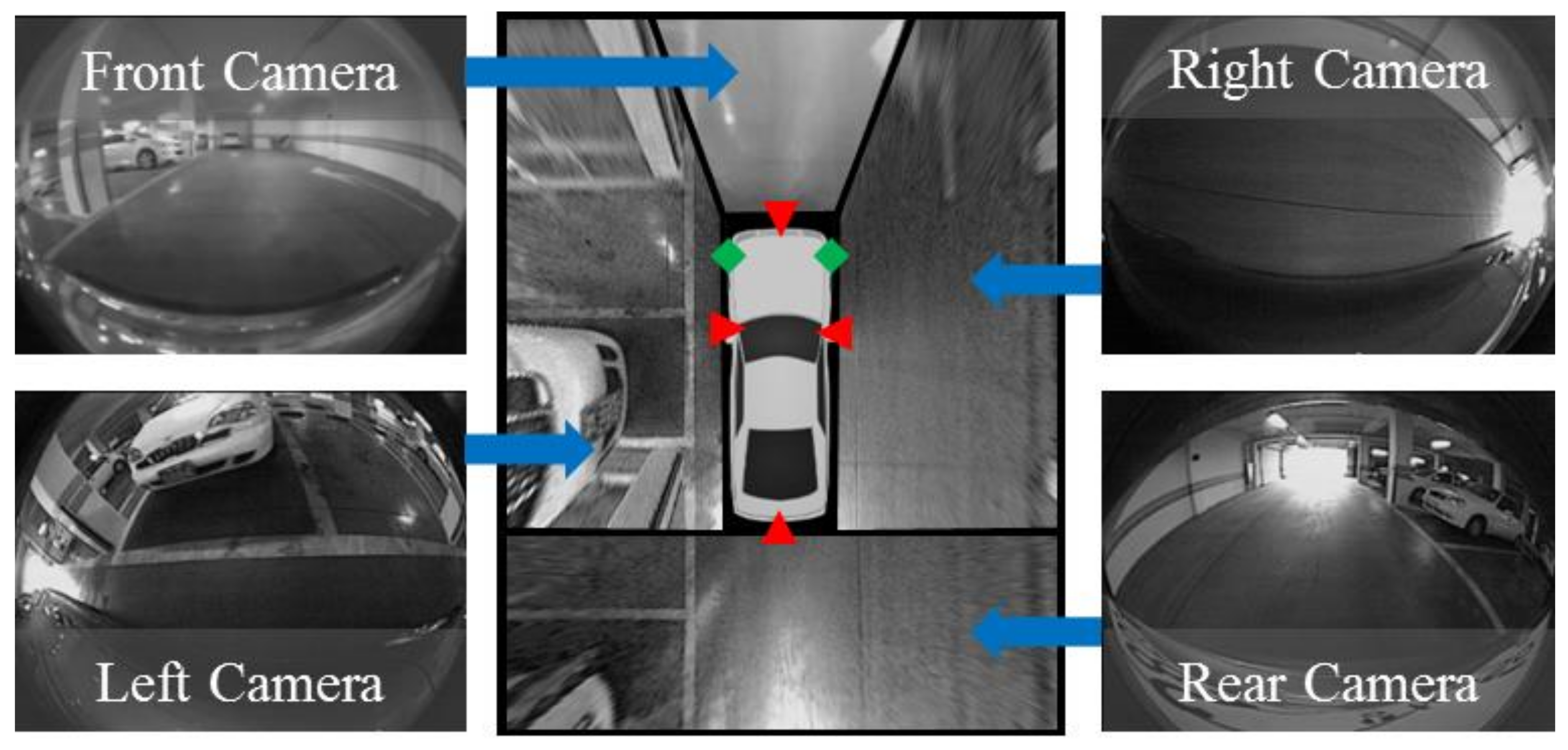

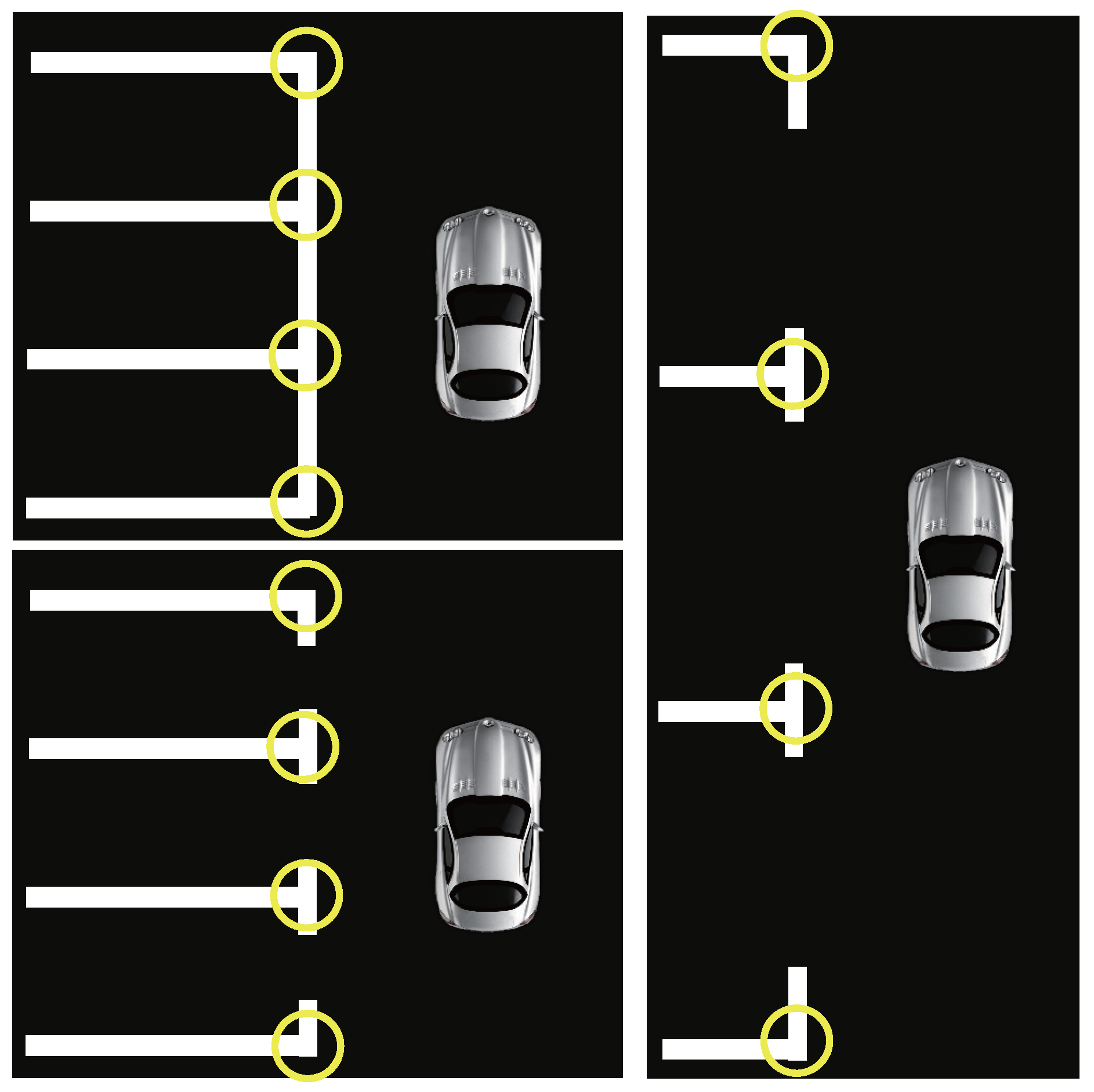

- Parking-slot detection algorithms. Secondly, a learning-based parking-slot detection approach, namely PSDL, is proposed. Using PSDL, given a surround-view image, the marking-points will be detected first and then the valid parking-slots can be inferred. The efficacy and efficiency of PSDL have been corroborated on our database.
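The intranode occupancy step above is cut off before it says how transitory events are filtered. One common way to do it, assumed here and not taken from the source, is to confirm a status change only after it has been observed for several consecutive frames. The class name `SlotDebouncer` and the `hold` parameter are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SlotDebouncer:
    """Confirm a status change only after `hold` consecutive differing frames.

    Assumption: this is a generic debouncing scheme, not the method of the
    system described in the text.
    """
    hold: int = 3                      # hypothetical persistence threshold, in frames
    status: bool = False               # confirmed status: True means occupied
    _streak: int = field(default=0, init=False)

    def update(self, observed: bool) -> bool:
        if observed == self.status:
            # The raw observation agrees with the confirmed status,
            # so any pending change is discarded.
            self._streak = 0
        else:
            self._streak += 1
            if self._streak >= self.hold:
                # The change has persisted long enough to be accepted.
                self.status = observed
                self._streak = 0
        return self.status


debouncer = SlotDebouncer(hold=3)
raw = [True, False, True, True, True]  # one-frame blip, then a real arrival
confirmed = [debouncer.update(o) for o in raw]
```

With this scheme a single-frame disturbance, such as a pedestrian crossing the RoI, never reaches the reported status; the cost is a delay of `hold` frames before a real change is reported.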

In-ground sensors

Smart Parking’s unassuming yet highly innovative in-ground sensors monitor individual parking spaces and relay occupancy status to our SmartSpot gateways, which in turn send this live status information to the SmartCloud platform, allowing real-time parking information to be viewed on multiple devices.

We ensure that each sensor meets our rigorous functional requirements before installation, meaning they can operate under a wide range of operational environments with high accuracy, and we can tailor sensor behaviour to individual parking space requirements.

All of Smart Parking’s systems are based around a modular approach so we’re able to offer bay monitoring, car counting systems, fixed and mobile automatic number plate recognition (ANPR), guidance signage and more to a wide range of large-scale sites.

Surface mount sensors

Smart Parking also offers the option of surface-mount sensors for exposed sites – such as thin surfaces covering cabling and services, membranes, roof tops and wharfs – where core drilling into the ground may not be an option. These sensors work exactly like their in-ground counterparts, and can also be fitted with a fluro collar for better visibility in areas where there is higher foot traffic.

Dataset Download

You can download CNRPark+EXT using the following links:

CNRPark+EXT.csv (18.1 MB)

CSV collecting metadata for each patch of both CNRPark and CNR-EXT datasets

CNRPark-Patches-150x150.zip (36.6 MB)

segmented images (patches) of parking spaces belonging to the CNRPark preliminary subset.

Files follow this organization: <CAMERA>/<CLASS>/YYYYMMDD_HHMM_<SLOT_ID>.jpg, where:

- <CAMERA> can be A or B,
- <CLASS> can be free or busy,
- YYYYMMDD_HHMM is the zero-padded 24-hour capture datetime,
- <SLOT_ID> is a local ID given to the slot for that particular camera.
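The naming scheme for CNRPark patches can be turned into structured metadata with a small parser. The sketch below is illustrative only: the names `CnrParkPatch` and `parse_cnrpark_patch` are hypothetical, and the pattern assumes that slot IDs are plain decimal integers.

```python
import re
from datetime import datetime
from typing import NamedTuple


class CnrParkPatch(NamedTuple):
    camera: str         # "A" or "B"
    label: str          # "free" or "busy"
    captured: datetime  # capture date and time
    slot_id: int        # local to the camera, not globally unique


# <CAMERA>/<CLASS>/YYYYMMDD_HHMM_<SLOT_ID>.jpg, optionally preceded by other folders.
_CNRPARK_PATCH = re.compile(
    r"(?:^|/)(?P<camera>[AB])/(?P<label>free|busy)/"
    r"(?P<stamp>\d{8}_\d{4})_(?P<slot>\d+)\.jpg$"
)


def parse_cnrpark_patch(path: str) -> CnrParkPatch:
    match = _CNRPARK_PATCH.search(path)
    if match is None:
        raise ValueError(f"not a CNRPark patch path: {path!r}")
    return CnrParkPatch(
        camera=match["camera"],
        label=match["label"],
        captured=datetime.strptime(match["stamp"], "%Y%m%d_%H%M"),
        slot_id=int(match["slot"]),
    )


patch = parse_cnrpark_patch("A/busy/20150703_1425_32.jpg")
```

Because the slot ID is local to a camera, the pair (camera, slot_id) is the natural key when grouping patches of the same slot.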

E.g.:

A/busy/20150703_1425_32.jpg

CNR-EXT-Patches-150x150.zip (449.5 MB)

segmented images (patches) of parking spaces belonging to the CNR-EXT subset.

Files follow this organization: PATCHES/<WEATHER>/<CAPTURE_DATE>/camera<CAM_ID>/<W_ID>_<CAPTURE_DATE>_<CAPTURE_TIME>_C0<CAM_ID>_<SLOT_ID>.jpg, where:

- <WEATHER> can be SUNNY, OVERCAST or RAINY,
- <CAPTURE_DATE> is the zero-padded YYYY-MM-DD formatted capture date,
- <CAM_ID> is the number of the camera, ranging 1-9,
- <W_ID> is a weather identifier, which can be S, O or R,
- <CAPTURE_TIME> is the zero-padded 24-hour HH.MM formatted capture time,
- <SLOT_ID> is a global ID given to the monitored slot; this can be used to uniquely identify a slot in the CNR-EXT dataset.

E.g.:

PATCHES/SUNNY/2015-11-22/camera6/S_2015-11-22_09.47_C06_205.jpg

The LABELS folder contains a list file for each split of the dataset used in our experiments. Each line in a list file follows this format: <IMAGE_PATH> <LABEL>, where <IMAGE_PATH> is the path to a slot image and <LABEL> is 0 for free, 1 for busy.
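As a rough illustration of how the CNR-EXT patch paths and the list files fit together, the sketch below parses one list-file line into a record. The function names are hypothetical, and the code assumes that image paths contain no trailing whitespace and that the line refers to a CNR-EXT patch; paths from other datasets are rejected with a `ValueError`.

```python
import re
from datetime import datetime

# <WEATHER>/<CAPTURE_DATE>/camera<CAM_ID>/<W_ID>_<CAPTURE_DATE>_<CAPTURE_TIME>_C0<CAM_ID>_<SLOT_ID>.jpg
# The backreferences require the date and camera in the file name to match the folders.
_EXT_PATCH = re.compile(
    r"(?P<weather>SUNNY|OVERCAST|RAINY)/(?P<date>\d{4}-\d{2}-\d{2})/camera(?P<cam>[1-9])/"
    r"(?P<wid>[SOR])_(?P=date)_(?P<time>\d{2}\.\d{2})_C0(?P=cam)_(?P<slot>\d+)\.jpg$"
)


def parse_ext_patch(path: str) -> dict:
    match = _EXT_PATCH.search(path)
    if match is None:
        raise ValueError(f"not a CNR-EXT patch path: {path!r}")
    return {
        "weather": match["weather"],
        "camera": int(match["cam"]),
        "slot_id": int(match["slot"]),  # global ID within CNR-EXT
        "captured": datetime.strptime(
            f"{match['date']} {match['time']}", "%Y-%m-%d %H.%M"
        ),
    }


def parse_label_line(line: str) -> dict:
    # Split on the last run of whitespace: "<IMAGE_PATH> <LABEL>".
    path, label = line.rsplit(maxsplit=1)
    if label not in ("0", "1"):
        raise ValueError(f"unexpected label: {label!r}")
    record = parse_ext_patch(path)
    record["busy"] = label == "1"  # 0 is free, 1 is busy
    return record
```

The pattern is searched rather than anchored at the start of the string, so it accepts paths with or without the leading PATCHES/ folder.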

CNR-EXT_FULL_IMAGE_1000x750.tar (1.1 GB)

full frames of the cameras belonging to the CNR-EXT subset. Images have been downsampled from 2592x1944 to 1000x750 due to privacy issues.

Files follow this organization: FULL_IMAGE_1000x750/<WEATHER>/<CAPTURE_DATE>/camera<CAM_ID>/<CAPTURE_DATE>_<CAPTURE_TIME>.jpg, where:

- <WEATHER> can be SUNNY, OVERCAST or RAINY,
- <CAPTURE_DATE> is the zero-padded YYYY-MM-DD formatted capture date,
- <CAM_ID> is the number of the camera, ranging 1-9,
- <CAPTURE_TIME> is the zero-padded 24-hour HHMM formatted capture time.

The archive also contains 9 CSV files (one per camera) with the bounding boxes of the parking spaces that were used to segment the patches. Pixel coordinates of the bounding boxes refer to the 2592x1944 version of the image and need to be rescaled to match the 1000x750 version.
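The rescaling amounts to multiplying horizontal values by 1000/2592 and vertical values by 750/1944; both ratios are equal, so the aspect ratio is preserved. A minimal sketch follows, assuming each box is given as a top-left corner plus width and height. That layout and the name `rescale_box` are assumptions, so check the CSV header before relying on them.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # assumed layout: (x, y, width, height)


def rescale_box(
    box: Box,
    src_size: Tuple[int, int] = (2592, 1944),
    dst_size: Tuple[int, int] = (1000, 750),
) -> Box:
    """Map a box from the original frame size to the downsampled frame size."""
    x, y, w, h = box
    src_w, src_h = src_size
    dst_w, dst_h = dst_size
    # Multiply before dividing to keep the intermediate values exact
    # for as long as possible, then round to whole pixels.
    return (
        round(x * dst_w / src_w),
        round(y * dst_h / src_h),
        round(w * dst_w / src_w),
        round(h * dst_h / src_h),
    )


full_frame = rescale_box((0, 0, 2592, 1944))
```

Rounding each value independently can shift a box edge by one pixel; if exact edges matter, rescale the two corners instead and derive the width and height from them.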

splits.zip (27.2 MB)

all the splits used in our experiments. Those splits combine our datasets and also third-party datasets (such as PKLot).